Components of an information management strategy

Before implementing new processes, it's important to understand the different parts and pieces of data management.

By David Loshin

There are two tracks of business drivers for deploying best practices in information management across the enterprise. First, there are common demands shared across many different industries, such as the need for actionable knowledge about customers and products to increase revenues and lengthen customer relationships. Second, there are operational and analytical needs specific to individual industries.

Each of these business drivers points to the need for increased agility and maturity in coupling well-defined information management practices with the technologies that compose an end-to-end information management framework.

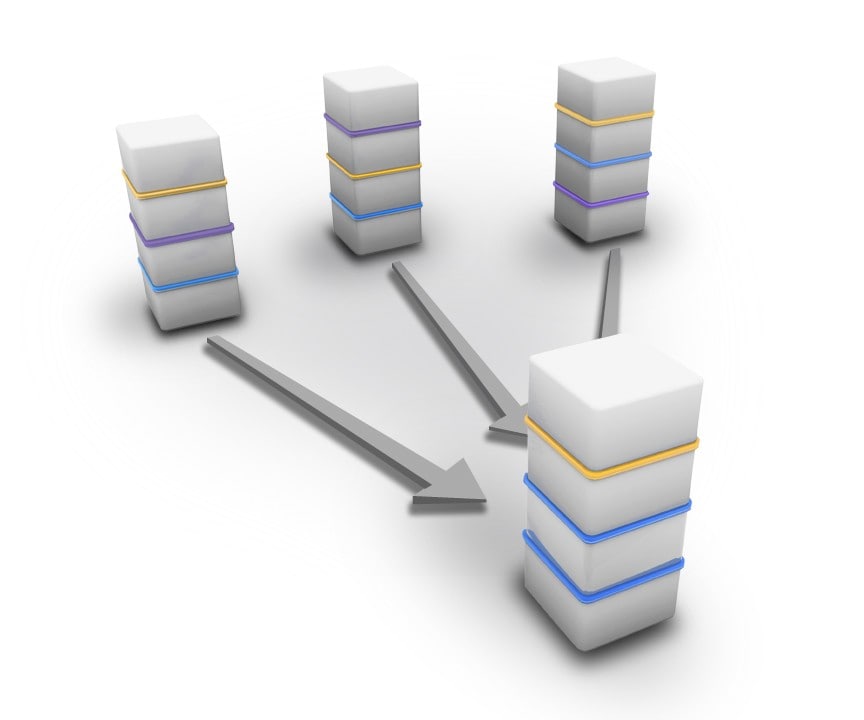

Data integration

Data integration has become the lifeblood of the enterprise. Organizations increasingly recognize how critical it is to share data across business functions, which points to a continued need for greater reliability, performance, and access speed in data integration, particularly in these fundamental capabilities:

- Data accessibility – Organizations must support a vast landscape of legacy data systems, especially because they want to scan historical data assets for potential business value. Accessibility is therefore a key aspect of data integration, and the information management framework going forward must provide connectors to a wide variety of data sources, including file-based and tree-structured data sets, relational databases, and even streamed data sources.

- Data transformation, exchange and delivery – Once data sets can be accessed from their original sources, the data integration framework must be able to efficiently move the data from source to target. There must be a capability to transform the data from its original format into one that is suited to the target, with a means of verifying that the data sets are appropriately packaged and delivered.

- Data replication and change data capture – Delivering ever-growing data volumes within expected time frames is impeded by bottlenecks, especially in periodic extractions from source systems and loads into data warehouses. Data replication techniques enable rapid bulk transfers of large data sets. Between bulk transfers, the target can be kept synchronized by trickle-feeding changes using a method known as “change data capture,” which monitors system logs and triggers updates to the target systems as changes happen in the source.
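A minimal in-memory sketch of replication followed by change data capture is shown below, in Python. It assumes a simplified log of insert, update, and delete records ordered by a log sequence number; the `Change` record and the helper functions are hypothetical and do not reflect any particular product's log format. The sketch makes an initial bulk copy, trickle-feeds later changes, and then verifies delivery by comparing checksums of source and target.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Any, Dict, List, Optional

Row = Dict[str, Any]
Table = Dict[int, Row]  # primary key -> row


@dataclass
class Change:
    """Hypothetical change-log record, standing in for what a CDC tool reads from a source log."""
    lsn: int                   # log sequence number: position in the source log
    op: str                    # "insert", "update", or "delete"
    key: int                   # primary key of the affected row
    row: Optional[Row] = None  # new row image (None for deletes)


def bulk_replicate(source: Table) -> Table:
    """Initial full copy of the source table (the bulk replication step)."""
    return {k: dict(v) for k, v in source.items()}


def apply_changes(target: Table, changes: List[Change], last_lsn: int) -> int:
    """Trickle-feed changes newer than last_lsn into target, in log order."""
    for ch in sorted(changes, key=lambda c: c.lsn):
        if ch.lsn <= last_lsn:
            continue  # already applied, so replaying the log is harmless
        if ch.op == "delete":
            target.pop(ch.key, None)
        elif ch.op in ("insert", "update"):
            target[ch.key] = dict(ch.row)
        else:
            raise ValueError(f"unknown operation: {ch.op}")
        last_lsn = ch.lsn
    return last_lsn


def checksum(table: Table) -> str:
    """Fingerprint of a table's contents, used to verify delivery."""
    h = hashlib.sha256()
    for key in sorted(table):
        h.update(json.dumps([key, table[key]], sort_keys=True).encode())
    return h.hexdigest()


# Usage: bulk copy first, then capture and apply later source changes.
source = {1: {"name": "Ada", "tier": "gold"}, 2: {"name": "Bo", "tier": "silver"}}
target = bulk_replicate(source)
lsn = 0

source[2]["tier"] = "gold"                      # changes made in the source...
source[3] = {"name": "Cy", "tier": "bronze"}
del source[1]
log = [                                         # ...as recorded in its log
    Change(1, "update", 2, {"name": "Bo", "tier": "gold"}),
    Change(2, "insert", 3, {"name": "Cy", "tier": "bronze"}),
    Change(3, "delete", 1),
]
lsn = apply_changes(target, log, lsn)
assert checksum(target) == checksum(source)     # target verified against source
```

Tracking the last applied sequence number is what lets the trickle feed resume after an interruption without applying any change twice.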

Data virtualization

Efficient data integration can address some of the issues associated with increasing demands to access data from numerous sources with varied structures and formats. Yet complications remain in populating data warehouses in a timely and consistent manner that meets the performance requirements of consuming systems. When those impediments stem from the complexity of performing extraction and transformation synchronously, you run the risk of timing and synchronization issues that lead to inconsistencies between the consumers of data and the original source systems.

One way to address this is to reduce the perceived data latency and asynchrony. Data virtualization techniques have evolved and matured to address these concerns. Data virtualization tools and techniques provide three key capabilities:

- Federation – They enable federation of heterogeneous sources by mapping a standard or canonical data model to the access methods for the variety of sources comprising the federated model.

- Caching – By managing accessed and aggregated data within a virtual (“cached”) environment, data virtualization reduces data latency, thereby increasing system performance.

- Consistency – Together, federation and virtualization abstract the methods for access and combine them with the application of standards for data validation, cleansing and unification.

A virtualized data environment can simplify how end-user applications and business data analysts access data, without requiring them to know where the source data resides, how it is integrated, or which business rules are applied.
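The Python sketch below illustrates federation, caching, and consistency together. It assumes two hypothetical sources (a CRM extract and a billing extract) that describe the same customers with different field names; each source gets a mapping function into a canonical customer record, a simple cleansing rule is applied on read, and results are held in a time-limited cache. Real virtualization products push queries down to the sources rather than scanning them, so treat this only as an illustration of the idea.

```python
import time

# Two hypothetical sources describing customers with different layouts.
crm_rows = [{"cust_id": 7, "full_name": "Ada Lovelace", "mail": " ADA@EXAMPLE.COM "}]
billing_rows = [{"id": 7, "balance_cents": 12500}]


# Mappings from each source layout to the canonical customer model.
def from_crm(r):
    # Consistency: a cleansing rule (trim and lowercase emails) is applied on read.
    return {"customer_id": r["cust_id"], "name": r["full_name"],
            "email": r["mail"].strip().lower()}


def from_billing(r):
    return {"customer_id": r["id"], "balance": r["balance_cents"] / 100}


class VirtualCustomerView:
    """Federates several sources behind one canonical view, with a TTL cache."""

    def __init__(self, sources, ttl_seconds=60.0):
        self.sources = sources      # list of (fetch_function, mapping_function)
        self.ttl = ttl_seconds
        self._cache = {}            # customer_id -> (time cached, record)
        self.source_reads = 0       # how many times an underlying source was read

    def get(self, customer_id):
        hit = self._cache.get(customer_id)
        if hit is not None and time.monotonic() - hit[0] < self.ttl:
            return hit[1]           # served from the cache, no source access
        record = {"customer_id": customer_id}
        for fetch, mapping in self.sources:
            self.source_reads += 1
            for raw in fetch():
                canonical = mapping(raw)
                if canonical["customer_id"] == customer_id:
                    record.update(canonical)
        self._cache[customer_id] = (time.monotonic(), record)
        return record


view = VirtualCustomerView([(lambda: crm_rows, from_crm),
                            (lambda: billing_rows, from_billing)])
print(view.get(7))
# {'customer_id': 7, 'name': 'Ada Lovelace', 'email': 'ada@example.com', 'balance': 125.0}
view.get(7)
print(view.source_reads)  # 2: the second lookup was answered from the cache
```

The consuming code asks only for a customer by its canonical identifier; it never learns which systems hold the name, email, or balance, or which cleansing rule was applied along the way.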

Event stream processing

Traditional business intelligence systems may not be able to actively capture, monitor, and correlate real-time event information and turn it into actionable knowledge. To address this gap, a technique called event stream processing (ESP) enables real-time monitoring of patterns and sequences of events flowing through streams of information.

ESP systems help organizations respond rapidly to emerging opportunities that can result from the confluence of multiple streams of information. These systems allow information management professionals to model how participants within an environment are influenced by many different data input streams, and to analyze patterns that trigger desired outcomes. ESP systems can continuously monitor (in real time) all potentially influential streams of events against the expected patterns and provide low-latency combination and processing of events within defined event windows. When there is a variance from expectations or a new opportunity is identified, the systems can alert the right individuals, who can then act much more rapidly than in a traditional data analysis scenario.

ESP networks can monitor high data volumes from multiple input data sources with very low event-processing latencies. Continuously monitoring a wide variety of streaming inputs in a scalable manner lets you recognize and respond to emerging scenarios sooner, because analysis turnaround times are shorter. In essence, ESP inverts the traditional approach: rather than running dynamic queries against static data, it simultaneously searches massive amounts of dynamic data for many predefined patterns.
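As an illustration of a pattern evaluated within an event window, the Python sketch below watches a stream of hypothetical login events and raises an alert when one user accumulates three failed logins within 60 seconds. The event fields, the pattern, and the thresholds are assumptions chosen for the example; the sketch also assumes events arrive in time order, which production ESP engines cannot take for granted.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass
class Event:
    ts: float   # event time, in seconds
    user: str
    kind: str   # e.g. "login_failed" or "login_ok"


class FailedLoginDetector:
    """Standing pattern: `threshold` failed logins by one user within a sliding time window."""

    def __init__(self, threshold=3, window_seconds=60.0):
        self.threshold = threshold
        self.window = window_seconds
        self.recent = defaultdict(deque)  # user -> failure timestamps still in the window

    def on_event(self, ev):
        """Process one event as it arrives; return an alert message or None."""
        if ev.kind != "login_failed":
            return None
        q = self.recent[ev.user]
        q.append(ev.ts)
        while q and ev.ts - q[0] > self.window:  # evict failures that slid out of the window
            q.popleft()
        if len(q) >= self.threshold:
            n = len(q)
            q.clear()                            # reset so one burst raises one alert
            return (f"ALERT: {ev.user} had {n} failed logins "
                    f"within {self.window:.0f}s at t={ev.ts:.0f}")
        return None


detector = FailedLoginDetector()
stream = [
    Event(0, "ana", "login_failed"),
    Event(10, "bo", "login_failed"),
    Event(30, "ana", "login_failed"),
    Event(45, "ana", "login_ok"),
    Event(70, "ana", "login_failed"),  # the t=0 failure is now outside the window
    Event(80, "ana", "login_failed"),  # t=30, 70, 80 fall within 60s: pattern matched
]
alerts = []
for ev in stream:
    alert = detector.on_event(ev)
    if alert:
        alerts.append(alert)
print(alerts)  # ['ALERT: ana had 3 failed logins within 60s at t=80']
```

The pattern is registered once and each incoming event is tested against it as it arrives, which is the inversion of the stored-data query model described above.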

Metadata management

The drive for cross-functional data sharing and exchange has exposed the inherent inconsistencies of data systems that were designed, developed, and implemented separately within functional silos. And because early metadata management approaches focused only on the structural, technical aspects of data models (to the exclusion of the meanings and semantics relevant to the business), metadata management projects often floundered. As a result, the modern enterprise information management environment must enable business-oriented metadata management, including tools and methods for:

- Business term glossaries to capture frequently used business terms and their authoritative definition(s)

- Data standards such as naming conventions, defined reference data sets, and standards for storage and exchange

- Data element definitions that reflect the connection to business terms and provide context-relevant definitions for use within business applications

- Data lineage that shows the relationships between data element concepts and their representation across different models and applications

- Integration with data governance policies to support validation, compliance, and control
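To show how glossary terms, data element definitions, naming standards, and lineage can fit together, here is a small Python model. The class names, the `system.table.column` naming convention, and the sample elements are all hypothetical; real metadata repositories hold far richer information, but the shape of the relationships is the point.

```python
import re
from collections import deque
from dataclasses import dataclass, field
from typing import List

# Hypothetical naming standard: lowercase snake_case, in the form system.table.column.
NAMING_STANDARD = re.compile(r"[a-z][a-z0-9_]*\.[a-z][a-z0-9_]*\.[a-z][a-z0-9_]*")


@dataclass
class BusinessTerm:
    name: str
    definition: str                 # authoritative business definition from the glossary


@dataclass
class DataElement:
    qualified_name: str             # where the element lives, e.g. "crm.customer.mail"
    term: BusinessTerm              # connection back to the business term
    derived_from: List["DataElement"] = field(default_factory=list)  # upstream elements


def conforms_to_naming_standard(element: DataElement) -> bool:
    return NAMING_STANDARD.fullmatch(element.qualified_name) is not None


def lineage(element: DataElement) -> List[str]:
    """Names of every upstream element feeding `element`, nearest first (breadth-first)."""
    seen, order = set(), []
    frontier = deque(element.derived_from)
    while frontier:
        upstream = frontier.popleft()
        if upstream.qualified_name in seen:
            continue
        seen.add(upstream.qualified_name)
        order.append(upstream.qualified_name)
        frontier.extend(upstream.derived_from)
    return order


# Illustrative glossary entry and elements across three models.
email = BusinessTerm("Customer Email", "Email address used to contact the customer.")
src = DataElement("crm.customer.mail", email)
staged = DataElement("staging.customer.email_std", email, [src])
mart = DataElement("mart.dim_customer.email", email, [staged])

print(lineage(mart))  # ['staging.customer.email_std', 'crm.customer.mail']
print(all(conforms_to_naming_standard(e) for e in (src, staged, mart)))  # True
```

Because every element points back to the same business term, a question such as "what does this mart column mean, and where did it come from?" can be answered from the metadata alone.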

Data quality management

Best practices for data quality management are intended to help organizations identify data flaws and errors more precisely and to simplify the analysis and remediation of their root causes. At the same time, data quality tools and techniques must support the ability to standardize data and correct it when possible, flag issues when they are identified, notify the appropriate data steward, and facilitate the communication of potential data issues to the source data providers. These objectives can be met within a formal framework for data quality management that incorporates techniques for:

- Data parsing and standardization – Scanning data values with the intent of transforming non-standard representations into standard formats.

- Data correction and cleansing – Applying data quality rules to correct recognized data errors as a way of cleansing the data and eliminating inconsistencies.

- Data quality rules management – Centrally managing data quality requirements and rules for validation and verification of compliance with data expectations.

- Data quality measurement and reporting – Providing a framework for invoking services that validate data against data rules and report anomalies and data flaws.

- Standardized data integration validation – Continual validation of existing data integration processes and embedded verification of newly developed data integration processes.

- Data quality assessment – Source data assessment and evaluation of data issues to identify potential data quality rules using data profiling and other statistical tools.

- Incident management – Standardized approaches to data quality incident management (reporting, analysis/evaluation, prioritization, remediation, tracking).
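Several of these techniques can be sketched in a few lines of Python: parsing and standardization, a central rule set, measurement and reporting of rule failures, and a list of incidents to route to a data steward. The phone-number format, the rule names, and the sample records are hypothetical, chosen only to keep the example short.

```python
import re
from collections import Counter


def standardize_phone(raw):
    """Parse a North American phone number into the standard form NNN-NNN-NNNN."""
    digits = re.sub(r"\D", "", raw)        # strip punctuation and spaces
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]                # drop the country code
    if len(digits) != 10:
        return None                        # cannot be standardized; a rule will flag it
    return f"{digits[:3]}-{digits[3:6]}-{digits[6:]}"


# Centrally managed data quality rules: rule name -> check on a standardized record.
RULES = {
    "phone_standardizable": lambda r: r["phone"] is not None,
    "state_is_two_letters": lambda r: re.fullmatch(r"[A-Z]{2}", r["state"]) is not None,
}


def assess(records):
    """Standardize each record, validate it against every rule, and report failures."""
    cleaned, failures, incidents = [], Counter(), []
    for i, rec in enumerate(records):
        clean = dict(rec, phone=standardize_phone(rec["phone"]),
                     state=rec["state"].strip().upper())
        cleaned.append(clean)
        for name, rule in RULES.items():
            if not rule(clean):
                failures[name] += 1
                incidents.append((i, name))  # to be routed to a data steward
    return cleaned, failures, incidents


records = [
    {"phone": "(555) 123-4567", "state": "ny"},
    {"phone": "+1 555.987.6543", "state": "CA "},
    {"phone": "12345", "state": "Calif."},
]
cleaned, failures, incidents = assess(records)
print([r["phone"] for r in cleaned])  # ['555-123-4567', '555-987-6543', None]
print(incidents)  # [(2, 'phone_standardizable'), (2, 'state_is_two_letters')]
for name in RULES:
    print(f"{name}: {len(records) - failures[name]}/{len(records)} records pass")
```

Keeping the rules in one table, separate from the code that applies them, is what makes them centrally manageable: adding a new expectation means adding an entry rather than changing every integration job.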

Data governance

Finally, no modern enterprise information management environment would be complete without techniques for validating data rules and compliance with data policies. At a minimum, these should be supported by tools for managing the data policy life cycle, which includes drafting policies, proposing them to the data governance committee, reviewing and revising them, seeking approval, and moving the resulting rules into production.

These tasks must be aligned with the design and development tasks within the organization’s system development life cycle. Governance thus permeates the entire lifetime of information management, from the analysis and synthesis of data consumer requirements through conceptual modeling, logical and physical design, and subsequent implementation.
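The data policy life cycle described in this section is essentially a small state machine with approval gates. The Python sketch below encodes it with hypothetical state names and a transition table that lets a review send a policy back to drafting; in practice each gate would also be tied to a milestone in the system development life cycle.

```python
from enum import Enum


class PolicyState(Enum):
    DRAFT = "draft"
    PROPOSED = "proposed"            # submitted to the data governance committee
    IN_REVIEW = "in_review"
    APPROVED = "approved"
    IN_PRODUCTION = "in_production"  # rules deployed for validation and monitoring


# Allowed transitions; a review can send the policy back for revision.
TRANSITIONS = {
    PolicyState.DRAFT: {PolicyState.PROPOSED},
    PolicyState.PROPOSED: {PolicyState.IN_REVIEW},
    PolicyState.IN_REVIEW: {PolicyState.DRAFT, PolicyState.APPROVED},
    PolicyState.APPROVED: {PolicyState.IN_PRODUCTION},
    PolicyState.IN_PRODUCTION: set(),
}


class DataPolicy:
    def __init__(self, name):
        self.name = name
        self.state = PolicyState.DRAFT
        self.history = [PolicyState.DRAFT]  # audit trail of every state reached

    def advance(self, new_state):
        """Move to new_state only if the transition table allows it."""
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"{self.name}: cannot move from "
                             f"{self.state.value} to {new_state.value}")
        self.state = new_state
        self.history.append(new_state)


# A policy that is sent back once for revision before approval.
policy = DataPolicy("customer-email-retention")
for step in (PolicyState.PROPOSED, PolicyState.IN_REVIEW, PolicyState.DRAFT,
             PolicyState.PROPOSED, PolicyState.IN_REVIEW, PolicyState.APPROVED,
             PolicyState.IN_PRODUCTION):
    policy.advance(step)

# Skipping review and approval is rejected.
shortcut = DataPolicy("shortcut")
try:
    shortcut.advance(PolicyState.IN_PRODUCTION)
    shortcut_rejected = False
except ValueError:
    shortcut_rejected = True
print(policy.state.value, shortcut_rejected)  # in_production True
```

Keeping the allowed transitions in one table makes it straightforward to audit that no rule reached production without passing review and approval.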

David Loshin, president of Knowledge Integrity, Inc., is a recognized thought leader and expert consultant in the areas of data quality, master data management and business intelligence.
